The Digital Checkpoint: How Age Verification Is Killing the Anonymous Internet

The Trigger: Britain’s Online Safety Act Takes Effect

On 25 July 2025 the most intrusive “children‑safety” provisions of the United Kingdom’s Online Safety Act came into force. Parliament passed the legislation in October 2023, but only from this date were platforms serving UK users required to implement “highly effective age assurance” for any content deemed potentially harmful, not just adult material.

Within days the biggest consumer services began rolling out mandatory identity checks:

- Reddit – UK users were prompted to upload a government‑issued ID photo or a selfie via the third‑party provider Persona.

- Discord – Launched a UK‑only flow that lets users verify age with either a live facial‑scan or an ID‑scan.

- Spotify – Required government‑document verification (or a facial‑age estimate) for access to certain 18+ music videos and explicit tracks.

These moves weren’t incremental; they represented a coordinated, rapid transformation of how the internet operates for UK residents.

The 72,000 Warning – A Breach That Foreshadowed Everything

That same day, 25 July 2025, the women‑only dating‑advice app Tea confirmed a massive data breach. Hackers accessed ≈ 72,000 images, including users’ selfies and the government‑ID photos they had uploaded for verification. The breach underscored the paradox at the heart of age‑verification: data collected for safety can become a huge privacy liability when stored, even temporarily.

The Official Narrative vs. The Reality

Official line: Protect children from harmful online content.

What actually happened: The law forces any platform accessible in the UK (forums, cloud‑storage services, delivery‑app review sections, gaming chats, you name it) to collect real‑world identity data. Failure to comply can mean fines of up to £18 million or 10 % of global revenue, whichever is higher, and even criminal charges for senior executives.

Because the regulation applies to any “potentially harmful” material, companies are opting for the most restrictive, globally‑applied solutions rather than building UK‑specific, privacy‑preserving systems.

From Historical Precedent to Digital Dystopia

The UK isn’t inventing surveillance. In the 1840s the British postal service operated a secret department that opened letters of political dissidents – including those of Italian revolutionary Giuseppe Mazzini – under the pretext of national security. Today, the same logic drives a modern, digital version of that intrusion.

Other nations are following suit:

Canada's Bill C-2 (the Strong Borders Act), introduced in June 2025, would expand police surveillance powers, allowing warrantless demands for user identity and login information and, critics warn, mandating backdoors in messaging apps.

Australia banned social media access for anyone under 16, requiring face scans and government IDs for verification, with plans to extend these controls to search engines.

The European Union advanced its "Chat Control" proposal, requiring platforms to automatically scan all private messages, emails, and stored files, effectively ending encrypted communication.

Multiple US states implemented their own age verification requirements, forcing platforms to adopt global policies rather than manage regional differences.

The pattern predates 2025. Germany's NetzDG law forces social networks to delete "illegal speech" under threat of fines. Australia approved its own Online Safety Act in 2021, giving a safety commissioner power to order content removal and even block websites with "harmful" material.

This synchronized rollout wasn't a coincidence. It was the logical outcome of years of quiet pressure to normalize pervasive identity checks.

How the New Verification Systems Work (and Why They’re Dangerous)

- Document Upload – Full scans of passports, driver’s licences, or national IDs, capturing address, birthdate, and even physical attributes unrelated to age.

- Biometric Scanning – Live facial‑recognition that records and stores facial geometry, micro‑expressions, and other biometric traits.

- Banking Integration – Credit‑card validation or open‑banking links that tie financial histories to online behaviour.

- Behavioral AI – Continuous monitoring of browsing patterns, engagement metrics, and content consumption to infer age and enforce restrictions.

All of these create massive, centralized databases of highly sensitive personal data, with little oversight and unclear security guarantees.
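To make the gap between what is collected and what is needed concrete, here is a minimal Python sketch. It is an illustration only, not any platform's or provider's actual implementation: the `IdDocument` fields, the `is_over_18` helper, and the sample values are all hypothetical. The point it tries to show is that an age gate needs a single yes/no answer, while a document upload hands over every other field as well.

```python
from dataclasses import dataclass, fields
from datetime import date


@dataclass(frozen=True)
class IdDocument:
    """Hypothetical subset of what a scanned ID exposes to a verifier."""
    full_name: str
    address: str
    birth_date: date
    document_number: str
    photo: bytes


def is_over_18(birth_date: date, today: date) -> bool:
    """Return True if the holder has reached their 18th birthday."""
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    age = today.year - birth_date.year - (0 if had_birthday else 1)
    return age >= 18


def surplus_fields() -> list[str]:
    """Fields a document upload collects that the age decision never reads."""
    return [f.name for f in fields(IdDocument) if f.name != "birth_date"]


if __name__ == "__main__":
    # Sample values are made up for illustration.
    doc = IdDocument("Alex Example", "1 Example Street",
                     date(2000, 1, 1), "X0000000", b"")
    print("over 18:", is_over_18(doc.birth_date, date(2025, 7, 25)))
    print("collected but unused:", surplus_fields())
```

Even the birth date is more than the platform needs to keep: once the yes/no result is computed, everything else could in principle be discarded.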

The Technical Reality: Constant Content Scanning

To meet the Act’s obligations, platforms must inspect every piece of user‑generated content – texts, images, videos – for “harmful” material before it’s posted or shared. This requirement collides head‑on with end‑to‑end encryption. Services like WhatsApp and Signal have warned they will withdraw from the UK rather than embed on‑device scanning that effectively turns phones into government‑sanctioned spyware.

The Chilling Effect

When every word, image, or video can be flagged and potentially punished, users self‑censor. Activists, whistleblowers, and ordinary citizens begin to avoid expressing controversial opinions, fearing legal repercussions or data exposure.

Human Rights Watch and the Electronic Frontier Foundation have both labeled the UK law a “deeply flawed censorship proposal” and an “enormous threat to privacy and online security.” Their concern: once a precedent is set, authoritarian regimes can adopt the same framework with fewer safeguards.

The Security Nightmare Illustrated by the Tea Breach

The Tea breach proved that even “temporary” storage of verification data is risky. Once the images and IDs were exfiltrated, they appeared on underground forums, enabling identity theft, stalking, and targeted phishing. The incident demonstrates that any system that aggregates large volumes of personal identifiers becomes a lucrative target for attackers.

Why This Isn’t Just About Child Protection

Age‑verification systems, as they stand, create a nationwide surveillance infrastructure that tracks every user, regardless of age. The consequences are profound:

- Anonymity disappears – Every interaction ties back to a real identity.

- Self‑censorship spikes – Knowing you’re identifiable discourages dissent.

- Whistleblowing becomes perilous – Leaks can be traced to individuals.

- A market for verification services – Companies that store billions of IDs become high‑value data brokers.

There Are Better Ways

If governments actually wanted to protect children while preserving privacy, the technology exists:

Zero-Knowledge Proofs: Systems that mathematically verify age without revealing identity, birthdate, or other personal information. Companies like UT and Privately offer zero-knowledge age checks that confirm you're over 18 without storing or learning your birth date or real name.

Local AI Estimation: On-device age estimation that analyzes selfies without uploading images to servers.

Anonymous Verification Tokens: Licensed third-party age verifiers could issue cryptographic tokens that say "this person passed age verification" while carrying no identifying data.

Payment-Based Verification: Anonymous prepaid systems that restrict access based on age without requiring personal information.
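The anonymous-token idea can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a real provider's protocol: `VERIFIER_KEY`, `issue_token`, and `check_token` are hypothetical names, and the sketch uses a shared-secret HMAC purely to stay within the standard library. A real deployment would more likely use public-key or blind signatures (or a zero-knowledge scheme) so that the verifier cannot link a token to the site where it is redeemed. What the sketch does show is that the token itself can carry nothing beyond "over 18", an expiry, and a random ID.

```python
import base64
import hashlib
import hmac
import json
import secrets
import time
from typing import Optional

# Assumption: the age verifier and the platform share this key.
# Real systems would likely use asymmetric or blind signatures instead.
VERIFIER_KEY = secrets.token_bytes(32)


def issue_token(ttl_seconds: int = 3600, now: Optional[float] = None) -> str:
    """Issue a token asserting only 'over 18', with an expiry and a random ID."""
    issued = time.time() if now is None else now
    claims = {
        "over_18": True,
        "exp": int(issued) + ttl_seconds,
        "jti": secrets.token_hex(16),  # random, not derived from the user
    }
    payload = base64.urlsafe_b64encode(
        json.dumps(claims, sort_keys=True).encode()
    )
    tag = hmac.new(VERIFIER_KEY, payload, hashlib.sha256).hexdigest()
    return payload.decode() + "." + tag


def check_token(token: str, now: Optional[float] = None) -> bool:
    """Accept the token only if its tag is valid and it has not expired."""
    current = time.time() if now is None else now
    payload, sep, tag = token.partition(".")
    if not sep:
        return False
    expected = hmac.new(VERIFIER_KEY, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(tag.encode(), expected.encode()):
        return False
    claims = json.loads(base64.urlsafe_b64decode(payload))
    return claims.get("over_18") is True and current < claims["exp"]


if __name__ == "__main__":
    token = issue_token(ttl_seconds=600)
    print("accepted:", check_token(token))
```

The platform in this sketch learns that some licensed verifier vouched for the holder and nothing else. A plain bearer token like this can still be shared or replayed, which is one reason production designs add further protections.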

None of these methods were prioritized because the goal was never just age verification.

Immediate Actions You Can Take

- Switch to No‑Logs VPNs – Proton VPN, Mullvad, IVPN, or Windscribe.

- Adopt Tor – Install the Tor Browser (desktop) or Orbot (Android) and use bridges that mimic regular traffic.

- Support Digital‑Rights Groups – Donate to or volunteer with the EFF, Access Now, or the Open Rights Group, which are campaigning against the UK law.

- Educate Others – Share guides on how to use privacy‑preserving tools; the more people adopt them, the harder it becomes for blanket surveillance to succeed.

Long‑Term Strategy: Decentralization

Centralized platforms are the easiest targets for government mandates. Decentralized alternatives sidestep the checkpoint model entirely:

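- SimpleX – End‑to‑end encrypted messaging with no user identifiers; messages pass through relay servers designed so they cannot link senders to recipients.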

- IPFS – Distributed file storage that removes a single point of control.

- Matrix – Federated communication where each server enforces its own policies.

- Mesh Networks (e.g., Meshtastic) – Peer‑to‑peer radio networks that operate outside the internet backbone.

Investing time in these tools builds a resilient ecosystem that can’t be forced into a single national compliance regime.

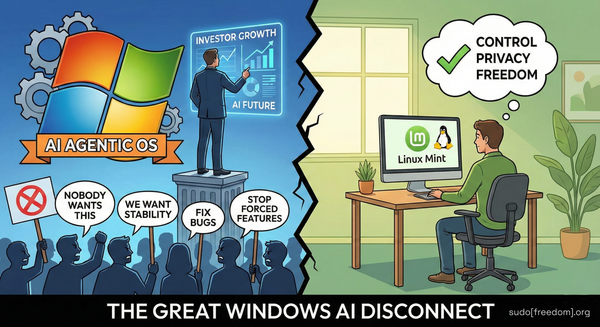

Why We Built sudo[freedom].org

The surge of age‑verification mandates highlighted the urgent need for a central hub that equips everyday users with concrete, privacy‑first alternatives. sudo[freedom].org provides:

- Step‑by‑step guides for installing privacy‑focused Linux distributions.

- Instructions for setting up personal home servers that keep your data under your control.

- Tutorials on mesh networking, decentralized messaging, and anonymous payment methods.

- Workshops and refurbished‑computer programs for underserved communities.

All content is written for non‑technical audiences, ensuring that anyone can reclaim digital sovereignty without sacrificing usability.

The Choice Ahead

The Online Safety Act presents a stark fork in the road:

- Accept the new normal – Live with ubiquitous ID checks, data collection, and the ever‑growing risk of breaches.

- Resist and rebuild – Adopt privacy tools now, support decentralized services, and push back through legal and civic channels.

History shows that technology alone won’t safeguard freedom; organized, informed communities will. The tools exist, the knowledge is out there, and the moment to act is now, before the surveillance infrastructure hardens further.

Your data, your rules. Your devices, your control. Your digital life, your choice.

Ready to start reclaiming your digital freedom? Visit sudo[freedom].org for practical guides, tools, and resources to escape the surveillance web and build a better, privacy‑first future.

![sudo[freedom].org](https://sudofreedom.org/content/images/2025/08/sudo.png)
